In this article, we will explore the key advantages of using Kubernetes compared to VMs, including improved scalability, flexibility, resource utilization, reliability, and security. We will also discuss the considerations involved in setting up and maintaining a Kubernetes environment, including the use of GitOps for managing and deploying applications. By understanding the benefits and challenges of using Kubernetes, organizations can make informed decisions about whether it is the right platform for their needs.

TL;DR

Kubernetes is a container orchestration platform for deploying and managing applications, with the goal of improving reliability, security, and scalability. It includes features such as self-healing, rolling updates, and automatic failover to help ensure the availability of applications. Kubernetes also offers mechanisms such as ReplicaSets (the successor to replication controllers) and liveness and readiness probes to improve the reliability of pods. These features, along with resource limits and quotas, help keep applications highly available and running close to their optimal capacity.

It is common to use Git and a GitOps workflow as a central source of truth in a Kubernetes environment, where application configurations and deployment manifests are stored in a Git repository and changes are made using pull requests. Tools such as Argo CD and Flux can be used to automate the deployment process in a GitOps workflow on top of Kubernetes.

In contrast, scaling virtual machines (VMs) typically involves more manual effort, and VMs may not offer the same level of flexibility and optimization options. Kubernetes can also provide better resource utilization than VMs, as containers are more lightweight and can be packed more efficiently onto a single host. In addition, Kubernetes allows applications to be deployed across multiple environments using the same configuration and offers role-based access control (RBAC) for fine-grained permissions.

Setup and Provisioning

For both variants (VMs and Kubernetes), we assume a strict GitOps-based setup and provisioning process.

GitOps is a workflow for managing and deploying applications using Git as a central source of truth. In a GitOps workflow, application configurations and deployment manifests are stored in a Git repository, and changes to these configurations are made using pull requests. This allows for version control and the ability to track changes to the application over time.
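The following is a minimal sketch of what "Git as the central source of truth" looks like with Argo CD. The Python snippet builds an Argo CD `Application` resource as a plain dictionary and prints it as JSON, which `kubectl apply -f` also accepts. It assumes that Argo CD is installed in the `argocd` namespace. The repository URL, path, and application name are hypothetical placeholders.

```python
import json


def argocd_application(name: str, repo_url: str, path: str,
                       target_namespace: str, revision: str = "main") -> dict:
    """Build an Argo CD Application that keeps `target_namespace` in sync
    with the manifests stored under `path` in the Git repository."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        # Assumption: Argo CD runs in the "argocd" namespace.
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": repo_url,
                "targetRevision": revision,  # branch, tag, or commit to track
                "path": path,  # directory containing the deployment manifests
            },
            "destination": {
                # The cluster Argo CD itself runs in.
                "server": "https://kubernetes.default.svc",
                "namespace": target_namespace,
            },
            "syncPolicy": {
                # Apply merged pull requests automatically, delete resources
                # removed from Git (prune), and revert manual drift (selfHeal).
                "automated": {"prune": True, "selfHeal": True},
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical repository and path, for illustration only.
    app = argocd_application(
        name="web",
        repo_url="https://git.example.com/platform/deployments.git",
        path="apps/web/production",
        target_namespace="production",
    )
    print(json.dumps(app, indent=2))
```

Once such a resource exists, changes reach the cluster only when a pull request is merged into the tracked branch. Flux offers an equivalent mechanism with its own `GitRepository` and `Kustomization` resources.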

Provisioning, or the initial setup and configuration of infrastructure and applications, is an important consideration in the lifecycle of any system. However, it is also important to consider continuous maintenance and upgrades to ensure that the system remains reliable, secure, and up to date. This includes tasks such as patching and updating systems, monitoring for and addressing issues, and implementing security controls. Properly managing these ongoing tasks can help improve the overall performance and security of the system.

The GitOps toolchain for setting up virtual machines (VMs) may vary. It can rely on configuration management tools such as Ansible and Puppet, or on infrastructure-as-code tools like Terraform. However, the initial effort to establish the necessary infrastructure and configuration is likely to be similar. One advantage of Terraform is that it describes the infrastructure declaratively as code, which makes it easier to replay or migrate the setup to another project or instance. Ansible and Puppet, on the other hand, are popular options for automating the initial setup of the VMs themselves. Regardless of the toolchain, it is important to consider not only the initial provisioning but also the ongoing maintenance and upgrades required to keep the system reliable, secure, and up to date.

Continuous update and upgrade process

Kubernetes publishes a new minor release roughly three times a year, and upgrades follow a well-defined, documented process. Using a Kubernetes-optimized operating system can reduce the attack surface and maintenance effort, as these systems are designed for the specific needs of a Kubernetes deployment. Many cloud providers offer their own Kubernetes-optimized operating systems (for example, Bottlerocket on AWS or Container-Optimized OS on Google Cloud). These often come with built-in upgrade workflows that can be automated or performed manually.

In contrast, VMs running a full Linux operating system require continuous upgrades to stay secure and reliable. This process typically involves a sysadmin or operator monitoring security advisories for critical issues and applying updates as needed. Tools such as Puppet and unattended-upgrades (on Debian and Ubuntu) can help automate this process.

In Kubernetes, system library updates are typically handled at the container level. The libraries ship inside the container image, so updating them means rebuilding and redeploying the image. Critical security issues can be addressed as part of a continuous vulnerability scanning process. This requires properly setting up and maintaining a dependency update tool such as Renovate, along with GitHub's security features (for example, Dependabot alerts). This helps keep the system secure and up to date.

Scalability

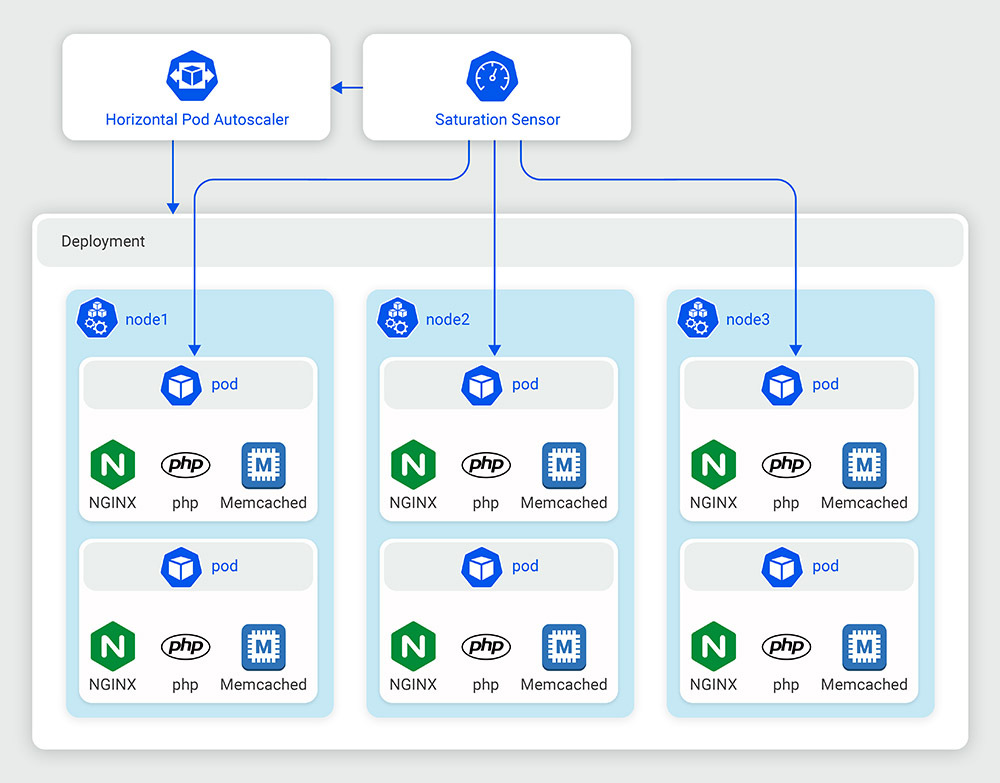

When it comes to scalability, there is a significant difference between virtual machines (VMs) and a Kubernetes cluster. Scaling VMs usually requires manual intervention: an operator must set up and configure new VMs, adjust network configurations, and manage load balancing. This process can be time-consuming and may impact the system’s performance. Kubernetes, in contrast, allows fine-grained control over the scaling of individual components within the system. The Horizontal Pod Autoscaler adjusts the number of replicas of each application based on observed metrics, and the scheduler places the new pods on nodes with free capacity. Combined with a cloud provider's node autoscaling, the cluster can add or remove nodes (and with them CPU cores and memory) on the fly, typically without downtime. This provides a great deal of flexibility and optimization options for adapting to changing resource requirements.
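The Horizontal Pod Autoscaler (HPA) is the Kubernetes component that adjusts replica counts. The sketch below reproduces the scaling formula from the Kubernetes documentation, `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. It includes the default tolerance of 0.1 and the min/max bounds, and it shows a matching `autoscaling/v2` HPA manifest. This is a simplified model: the real controller also handles missing metrics, pods that are not yet ready, and stabilization windows. The deployment name and thresholds are hypothetical.

```python
import json
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int, max_replicas: int,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA decision for one metric (e.g. average CPU utilization)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current
    else:
        desired = math.ceil(current * ratio)
    # The HPA never goes below minReplicas or above maxReplicas.
    return max(min_replicas, min(max_replicas, desired))


def cpu_hpa(deployment: str, min_replicas: int, max_replicas: int,
            cpu_utilization: int) -> dict:
    """An autoscaling/v2 HPA targeting average CPU utilization in percent."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": deployment},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment",
                               "name": deployment},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {"name": "cpu",
                             "target": {"type": "Utilization",
                                        "averageUtilization": cpu_utilization}},
            }],
        },
    }


if __name__ == "__main__":
    # 4 pods at 90% average CPU with a 60% target -> ceil(4 * 1.5) = 6 pods.
    print(desired_replicas(4, 90, 60, min_replicas=2, max_replicas=10))
    print(json.dumps(cpu_hpa("web", 2, 10, 60), indent=2))
```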

Flexibility

In addition to scaling flexibility, container technology lets developers shift left and ship an application together with its environment, including all dependencies and version constraints, without touching the operating system layer. Development teams can package everything into an immutable container image without having to worry about the underlying system. In a decentralized software environment where each component can be scaled independently, this is a significant advantage over VMs. On a VM, applications share the same system libraries, so a dependency upgrade for one application can affect the others running on the same machine.

In Kubernetes, workloads are not tied to a specific node. When a node is drained, for example before a reboot, its pods are evicted and rescheduled onto other nodes. VMs, in contrast, are unreachable during a reboot, which can impact the availability of the system. The ability to move workloads between nodes improves the reliability and uptime of the system as a whole.
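A node is drained before a reboot, for example with `kubectl drain`, and the resulting evictions respect any PodDisruptionBudget (PDB) defined for the workload. The sketch below builds a `policy/v1` PDB that keeps at least two replicas of a hypothetical `web` application running while nodes are drained. The `evictions_allowed` helper is a hypothetical illustration of the budget arithmetic. The label and numbers are assumptions.

```python
import json


def pod_disruption_budget(app: str, min_available: int) -> dict:
    """A PDB that blocks voluntary evictions (such as node drains) that would
    leave fewer than `min_available` healthy pods labeled app=<app>."""
    return {
        "apiVersion": "policy/v1",
        "kind": "PodDisruptionBudget",
        "metadata": {"name": f"{app}-pdb"},
        "spec": {
            "minAvailable": min_available,
            "selector": {"matchLabels": {"app": app}},
        },
    }


def evictions_allowed(healthy_pods: int, min_available: int) -> int:
    """Hypothetical helper: pods a drain may evict right now under the PDB."""
    return max(0, healthy_pods - min_available)


if __name__ == "__main__":
    print(json.dumps(pod_disruption_budget("web", min_available=2), indent=2))
    # With 3 healthy replicas and minAvailable=2, a drain can evict one pod;
    # the next eviction is only allowed once a replacement is healthy again.
    print(evictions_allowed(healthy_pods=3, min_available=2))
```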

Resource optimization

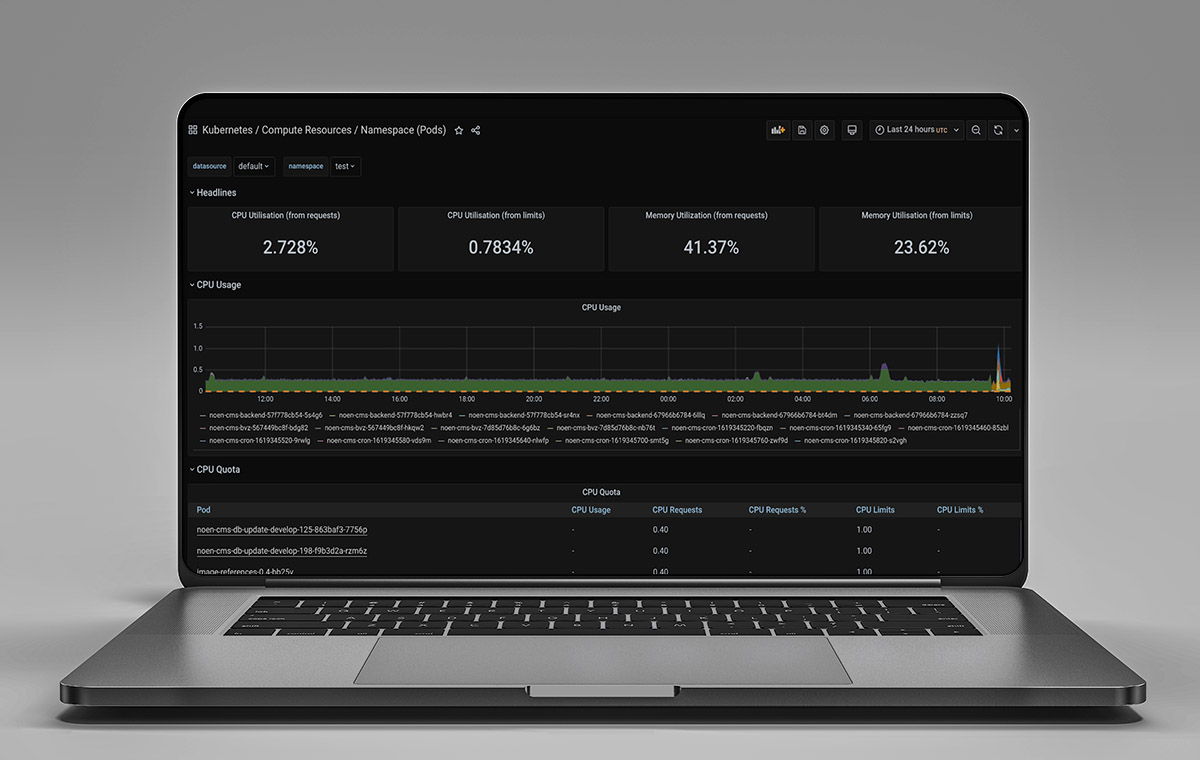

One of the main benefits of using containers is their efficient resource utilization. Containers share the host's kernel instead of each running a full operating system. This makes them more lightweight than virtual machines and lets us pack more containers onto a single physical or virtual host. With the right configuration, Kubernetes can further optimize resource utilization within the cluster, as the scheduler distributes workloads across nodes based on the resources each pod requests. Together with the scalability features, this enables the system to respond quickly to changes in demand and helps keep applications running close to their optimal capacity.
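The scheduler's packing decisions are driven by the resource requests declared on each container. A pod is only placed on a node whose remaining allocatable capacity covers its requests. The sketch below shows such a container spec and a rough estimate of how many identical pods fit on one node. The image name, node capacity, and request values are hypothetical. The real scheduler also considers the other pods already on the node, pod-count limits, and affinity rules.

```python
import json


def web_container(image: str) -> dict:
    """Container spec with explicit requests (used for scheduling) and a
    memory limit (enforced at runtime)."""
    return {
        "name": "web",
        "image": image,
        "resources": {
            "requests": {"cpu": "250m", "memory": "256Mi"},
            "limits": {"memory": "512Mi"},
        },
    }


def pods_that_fit(free_cpu_millicores: int, free_memory_mib: int,
                  cpu_request_millicores: int, memory_request_mib: int) -> int:
    """Identical pods that fit by requests alone; the scarcer resource wins."""
    return min(free_cpu_millicores // cpu_request_millicores,
               free_memory_mib // memory_request_mib)


if __name__ == "__main__":
    print(json.dumps(web_container("registry.example.com/web:1.4.2"), indent=2))
    # Hypothetical node with 3800m CPU and 14 GiB memory allocatable:
    # CPU allows 15 pods, memory 56, so CPU is the limiting resource.
    print(pods_that_fit(3800, 14 * 1024, 250, 256))
```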

At STRG, we take this a step further by combining multiple stages (e.g. integration, staging, and production) into a single cluster. This allows us to reduce resource waste while maintaining the necessary performance constraints for each stage. With the right configuration, we are able to prevent side effects from integration or staging workloads on production or other critical components.
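The exact configuration used at STRG is not described here, so the following sketch shows only one common approach to running several stages in one cluster. Each stage gets its own namespace, and a `ResourceQuota` caps what the non-production stages may request. `PriorityClass`es let the scheduler preempt lower-priority integration and staging pods in favor of production pods under resource pressure. All names, priority values, and quotas are hypothetical.

```python
import json

# Hypothetical stage names and priority values; a higher value wins preemption.
STAGE_PRIORITIES = {"integration": 1000, "staging": 2000, "production": 100000}

# Hypothetical caps on the total requests of non-production stages.
STAGE_QUOTAS = {
    "integration": {"requests.cpu": "2", "requests.memory": "4Gi"},
    "staging": {"requests.cpu": "4", "requests.memory": "8Gi"},
}


def stage_manifests(stage: str) -> list:
    """Namespace, PriorityClass and (for non-production stages) ResourceQuota."""
    manifests = [
        {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": stage}},
        {
            # PriorityClass is cluster-scoped, so it has no namespace.
            "apiVersion": "scheduling.k8s.io/v1",
            "kind": "PriorityClass",
            "metadata": {"name": f"{stage}-priority"},
            "value": STAGE_PRIORITIES[stage],
            "globalDefault": False,
            "description": f"Pods of the {stage} stage",
        },
    ]
    if stage in STAGE_QUOTAS:
        manifests.append({
            "apiVersion": "v1",
            "kind": "ResourceQuota",
            "metadata": {"name": f"{stage}-quota", "namespace": stage},
            "spec": {"hard": STAGE_QUOTAS[stage]},
        })
    return manifests


if __name__ == "__main__":
    for stage in STAGE_PRIORITIES:
        for manifest in stage_manifests(stage):
            print(json.dumps(manifest))
```

Pods opt into a priority through `priorityClassName` in their spec. Once a quota on `requests.cpu` or `requests.memory` is active in a namespace, pods there must declare those requests or they are rejected.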

Improved reliability

Kubernetes includes features such as self-healing, rolling updates, and automatic failover that help ensure the availability of applications. In addition, Kubernetes offers mechanisms such as ReplicaSets (the successor to replication controllers) and liveness and readiness probes to improve the reliability of pods. These features, along with resource limits and quotas, help keep applications highly available and running close to their optimal capacity.
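Several of these mechanisms come together in a single Deployment. The sketch below builds an `apps/v1` Deployment with three replicas, which are maintained by the ReplicaSet the Deployment manages. It uses a rolling update strategy that never drops below the desired replica count, plus readiness and liveness probes. The image, port, and health-check paths are hypothetical, and the application would need to actually expose such endpoints.

```python
import json


def http_probe(path: str, port: int, period_seconds: int, **extra) -> dict:
    """An HTTP GET probe; extra keys (e.g. initialDelaySeconds) pass through."""
    return {"httpGet": {"path": path, "port": port},
            "periodSeconds": period_seconds, **extra}


def deployment(name: str, image: str, replicas: int = 3) -> dict:
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # the ReplicaSet replaces failed pods
            "selector": {"matchLabels": labels},
            "strategy": {
                "type": "RollingUpdate",
                # Start one extra pod before stopping an old one, so capacity
                # never drops below `replicas` during an update.
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": 8080}],
                        # Not ready -> removed from Service endpoints (no traffic).
                        "readinessProbe": http_probe("/healthz/ready", 8080, 5),
                        # Repeatedly failing -> the container is restarted.
                        "livenessProbe": http_probe("/healthz/live", 8080, 10,
                                                    initialDelaySeconds=10,
                                                    failureThreshold=3),
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(deployment("web", "registry.example.com/web:1.4.2"), indent=2))
```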

In an enterprise environment, maintaining high uptime is crucial. Using Kubernetes and leveraging its reliability features can increase the availability of applications and improve the system’s overall performance.

Security

There are several situations where Kubernetes may be the better choice for security compared to virtual machines. One such situation is when we are running a large number of applications or services that require isolation from each other. In this case, Kubernetes allows us to run each application in its own isolated environment, called a “pod”. Combined with namespaces, network policies, and restrictive security contexts, this can provide an additional layer of security. However, containers on the same node share the host kernel and are therefore less strongly isolated than separate VMs.
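By default, pods can reach each other across the whole cluster, so the isolation described above has to be configured explicitly. The following is a minimal sketch, assuming a network plugin (CNI) that enforces NetworkPolicies. It defines a default-deny ingress policy for one namespace and a restrictive container security context. The namespace name is hypothetical.

```python
import json


def default_deny_ingress(namespace: str) -> dict:
    """Select every pod in the namespace and allow no ingress traffic;
    additional policies can then allow specific flows."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-ingress", "namespace": namespace},
        "spec": {
            "podSelector": {},  # empty selector = all pods in the namespace
            "policyTypes": ["Ingress"],  # no ingress rules listed = deny all
        },
    }


# Container-level hardening: non-root user, no privilege escalation,
# read-only root filesystem, all Linux capabilities dropped, and the
# container runtime's default seccomp profile.
RESTRICTED_SECURITY_CONTEXT = {
    "runAsNonRoot": True,
    "allowPrivilegeEscalation": False,
    "readOnlyRootFilesystem": True,
    "capabilities": {"drop": ["ALL"]},
    "seccompProfile": {"type": "RuntimeDefault"},
}


if __name__ == "__main__":
    print(json.dumps(default_deny_ingress("production"), indent=2))
    print(json.dumps({"securityContext": RESTRICTED_SECURITY_CONTEXT}, indent=2))
```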

Another situation where Kubernetes may be the better choice is when we need to scale quickly to meet changing demand. Kubernetes makes it easy to increase or decrease the number of replicas of an application in response to changes in load. This is also valuable in environments where security is a top priority, as it allows resources to be allocated effectively to meet the needs of the applications.

In addition, Kubernetes may be the better choice when we need to deploy applications across multiple environments, such as development, staging, and production, using the same configuration. This helps ensure that applications are deployed consistently across all environments. It also reduces configuration drift, which makes it easier to keep every environment securely configured.
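In practice this is commonly done with Kustomize overlays or Helm values files. The underlying idea can be sketched with a hypothetical `deep_merge` helper. One shared base manifest is combined with small per-environment overrides, so only the intended differences (here, namespace and replica count) vary between stages. This is a simplified stand-in, not how Kustomize's patching actually works; all names and values are assumptions.

```python
import copy
import json

# Shared base definition, identical for every environment (hypothetical values).
BASE = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web"},
    "spec": {
        "replicas": 1,
        "selector": {"matchLabels": {"app": "web"}},
        "template": {
            "metadata": {"labels": {"app": "web"}},
            "spec": {"containers": [
                {"name": "web", "image": "registry.example.com/web:1.4.2"}]},
        },
    },
}

# Only the differences are written per environment.
OVERRIDES = {
    "development": {"metadata": {"namespace": "development"}},
    "staging": {"metadata": {"namespace": "staging"}},
    "production": {"metadata": {"namespace": "production"}, "spec": {"replicas": 3}},
}


def deep_merge(base: dict, override: dict) -> dict:
    """Return a copy of `base` with `override` merged in; nested dicts are
    merged recursively, other values (including lists) are replaced."""
    result = copy.deepcopy(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = deep_merge(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


def render(environment: str) -> dict:
    return deep_merge(BASE, OVERRIDES[environment])


if __name__ == "__main__":
    for env in OVERRIDES:
        print(env, json.dumps(render(env)))
```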

Finally, Kubernetes may be the better choice when we need to implement fine-grained access controls. Kubernetes provides role-based access control (RBAC), which allows us to define granular access controls for different users and groups within the system. This can be useful in environments where we need to implement strict security controls to ensure that only authorized users have access to certain resources.
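As an illustration, the sketch below defines a namespaced `Role` that only allows reading pods and their logs, and binds it to a group through a `RoleBinding`. It also includes a small, hypothetical `allows` helper that mimics how RBAC evaluates rules. Permissions are purely additive, so anything not explicitly granted is denied. The group, role, and namespace names are assumptions.

```python
import json

RBAC_API = "rbac.authorization.k8s.io"

# Read-only access to pods and their logs in the "staging" namespace (hypothetical).
ROLE = {
    "apiVersion": f"{RBAC_API}/v1",
    "kind": "Role",
    "metadata": {"name": "pod-reader", "namespace": "staging"},
    "rules": [{
        "apiGroups": [""],  # "" = the core API group
        "resources": ["pods", "pods/log"],
        "verbs": ["get", "list", "watch"],
    }],
}

# Grant the Role to every member of the (hypothetical) "developers" group.
ROLE_BINDING = {
    "apiVersion": f"{RBAC_API}/v1",
    "kind": "RoleBinding",
    "metadata": {"name": "developers-pod-reader", "namespace": "staging"},
    "subjects": [{"kind": "Group", "name": "developers", "apiGroup": RBAC_API}],
    "roleRef": {"kind": "Role", "name": "pod-reader", "apiGroup": RBAC_API},
}


def allows(role: dict, api_group: str, resource: str, verb: str) -> bool:
    """Hypothetical, simplified RBAC check: allowed only if some rule matches
    (there are no deny rules). Ignores wildcards and resourceNames."""
    return any(
        api_group in rule["apiGroups"]
        and resource in rule["resources"]
        and verb in rule["verbs"]
        for rule in role["rules"]
    )


if __name__ == "__main__":
    print(json.dumps(ROLE, indent=2))
    print(json.dumps(ROLE_BINDING, indent=2))
    print(allows(ROLE, "", "pods", "list"))    # True
    print(allows(ROLE, "", "pods", "delete"))  # False: never granted
```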

by Nils Müller

- Call STRG's CEO Jürgen or send an email to juergen.schmidt@strg.at. Mobile: +43 699 1 7777 165