- Tobias Kietreiber

Ever since Google DeepMind's AlphaGo program defeated top Go player Lee Sedol in 2016, the power of Reinforcement Learning has been apparent to researchers and industry alike. From mastering ancient board games to conquering virtual battlegrounds in StarCraft II and Dota 2, Reinforcement Learning has shown that it can tackle complex tasks previously deemed exclusive to human intelligence. Beneath these achievements, however, lies the stark reality of lengthy training processes. Reinforcement Learning can be deployed successfully for simpler tasks, but it is still a long way from being practical for a broad range of more complex real-world tasks.

Fortunately, human experts already perform many of those tasks very well. In this blog post, we will explore the core ideas of a method called "Imitation Learning", which harnesses the knowledge these experts possess to teach programs to match human performance very closely.

Environment Setting

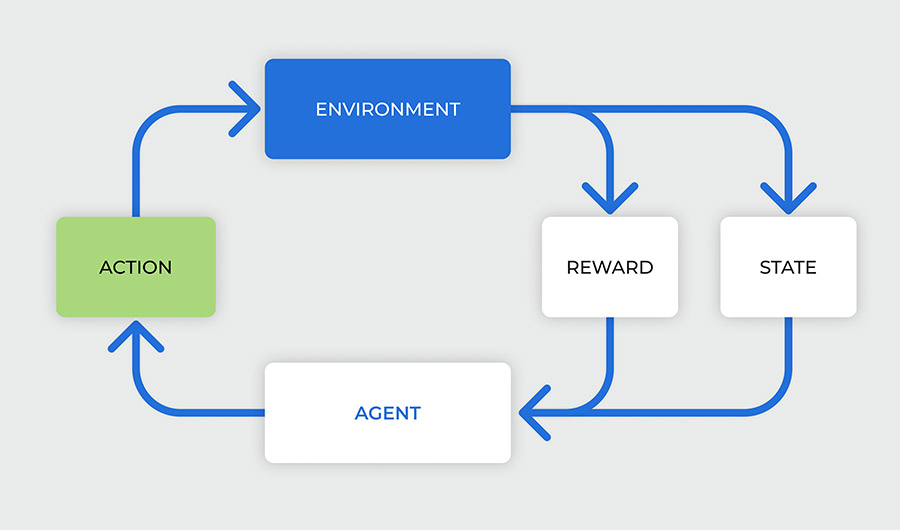

Let's first briefly familiarize ourselves with the setting underlying Reinforcement Learning, which is best described by the picture above. An "agent" is placed in an environment in a specific state (e.g. a car on a road, where the state tells the agent its speed and position). In that state, it can take actions (e.g. stepping on the gas to accelerate), which move the environment to another state and also yield some reward (e.g. for staying within the road bounds and respecting speed limits). The agent then tries to choose its actions so that it collects as much reward as possible over time. See also this previous blog post for a more detailed explanation.
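To make this loop concrete, here is a minimal Python sketch of the agent-environment interaction. Everything in it is a hypothetical simplification for illustration: the `ToyRoad` environment, its one-dimensional state (the car's lateral offset from the lane center), the reward of 1 for every step spent on the road, and the hand-written `centering_policy` are not part of any real simulator or library.

```python
import random


class ToyRoad:
    """Hypothetical 1-D driving environment (illustration only).

    The state is the car's lateral offset from the lane center in meters;
    0.0 means perfectly centered.
    """

    def __init__(self, half_width=2.0, seed=0):
        self.half_width = half_width
        self.rng = random.Random(seed)  # seeded so runs are reproducible
        self.offset = 0.0

    def reset(self):
        self.offset = 0.0
        return self.offset

    def step(self, steer):
        """Apply an action (-1 = left, 0 = straight, +1 = right)."""
        # The action moves the car sideways; a small random disturbance
        # (wind, road camber) is added on top.
        self.offset += 0.5 * steer + self.rng.uniform(-0.2, 0.2)
        on_road = abs(self.offset) <= self.half_width
        reward = 1.0 if on_road else 0.0  # reward for staying within bounds
        done = not on_road                # the episode ends once the car leaves the road
        return self.offset, reward, done


def run_episode(env, policy, max_steps=50):
    """The agent-environment loop: observe the state, act, collect the reward."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):
        action = policy(state)
        state, reward, done = env.step(action)
        total_reward += reward
        if done:
            break
    return total_reward


def centering_policy(offset):
    # Steer back toward the center whenever the car drifts more than 0.25 m.
    if offset > 0.25:
        return -1
    if offset < -0.25:
        return 1
    return 0


print(run_episode(ToyRoad(), centering_policy))  # stays on the road for all 50 steps
print(run_episode(ToyRoad(), lambda offset: 1))  # always steers right and soon leaves the road
```

The agent never sees the reward function itself, only the rewards it receives after acting; that is what distinguishes this setting from ordinary supervised learning.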

In Imitation Learning, we let a human demonstrate their expertise on a task while recording every step. In the driving example above, we would ask a particularly good driver to drive around the city, while recording the states as camera and sensor data, and the actions as steering and throttle inputs.
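A recorded demonstration is essentially a list of (state, action) pairs. The sketch below shows one way to log them. The state and action layouts, the `toy_dynamics` function, and the `expert_driver` rule (steer halfway back to the center, ease toward a hypothetical 14 m/s) are all illustrative assumptions; in practice the states would be camera and sensor readings and the actions would come from a real driver.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

State = Tuple[float, float]   # (lateral offset in m, speed in m/s), hypothetical layout
Action = Tuple[float, float]  # (steering, throttle), hypothetical layout


@dataclass
class Transition:
    state: State    # what the expert observed
    action: Action  # what the expert did in response


def toy_dynamics(state: State, action: Action) -> State:
    """Hypothetical environment update: steering shifts the car sideways,
    throttle changes the speed."""
    offset, speed = state
    steer, throttle = action
    return (offset + steer, speed + throttle)


def expert_driver(state: State) -> Action:
    """Stand-in for the human expert: steer halfway back to the lane center
    and ease the speed toward 14 m/s."""
    offset, speed = state
    return (-0.5 * offset, 0.2 * (14.0 - speed))


def record_demonstration(policy: Callable[[State], Action],
                         dynamics: Callable[[State, Action], State],
                         start: State, steps: int) -> List[Transition]:
    demo = []
    state = start
    for _ in range(steps):
        action = policy(state)
        demo.append(Transition(state, action))  # log every step
        state = dynamics(state, action)
    return demo


demo = record_demonstration(expert_driver, toy_dynamics, start=(1.0, 10.0), steps=5)
for transition in demo:
    print(transition)
```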

Behavioral Cloning in Machine Learning

This brings us to the first approach to Imitation Learning, which is very simple yet often still effective: behavioral cloning. In a perfect replica of the environment, we could simply replay the expert's actions and get the same performance. Because the agent must also cope with situations the expert never encountered, we instead train a deep neural network to react to each new situation with the action the human demonstrator would have taken. Of course, reality is not perfect: the street the expert drove down yesterday might be wet from rain today, the road might be more congested because of a concert on the weekend, or we might simply want to drive in an entirely different country. In addition, every machine learning model has some inaccuracy, which makes the agent drift ever so slightly off the expert's demonstrated path.
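At its core, behavioral cloning is ordinary supervised learning: fit a function from recorded states to recorded actions. The sketch below swaps the deep neural network for a one-parameter linear model fitted by least squares so that it stays short and dependency-free. The demonstration data (an expert who steers by `-0.5 * offset`, plus a little noise) is made up for illustration.

```python
def fit_linear_policy(states, actions):
    """Ordinary least-squares fit of action = w * state + b.

    A stand-in for training a neural network on (state, action) pairs.
    """
    n = len(states)
    mean_s = sum(states) / n
    mean_a = sum(actions) / n
    cov = sum((s - mean_s) * (a - mean_a) for s, a in zip(states, actions))
    var = sum((s - mean_s) ** 2 for s in states)
    w = cov / var
    b = mean_a - w * mean_s

    def policy(state):
        return w * state + b

    return policy, w, b


# Hypothetical demonstrations: lateral offsets and the expert's steering,
# with small noise because no human is perfectly consistent.
states = [-1.0, -0.5, 0.0, 0.5, 1.0]
noise = [0.02, -0.01, 0.03, -0.02, 0.01]
actions = [-0.5 * s + e for s, e in zip(states, noise)]

cloned, w, b = fit_linear_policy(states, actions)
print(f"learned policy: action = {w:.3f} * offset + {b:.3f}")
print("cloned action at offset 0.8:", round(cloned(0.8), 4), "(expert: -0.4)")
```

The fitted coefficients land close to, but not exactly on, the expert's rule; that small mismatch is the kind of inaccuracy that lets the agent drift off the demonstrated path.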

All this makes it increasingly difficult for the agent to follow the expert's path, until it eventually ventures too far off and can no longer solve the task. For example, an autonomous vehicle trained by behavioral cloning would start off driving very close to the human's path. But suppose the road is less congested than when the human was driving, so the agent drives a little faster than the human did, which makes it veer slightly off the road on the next bend. Because it now finds itself in situations that never appeared in the demonstrations, it has no example of how to get back. If this happens a few times, the vehicle ends up further and further off the road until it cannot recover anymore and simply drives off. This is schematically illustrated in the animation below.
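The compounding-error effect can be reproduced in a few lines. In this sketch, which uses purely hypothetical numbers, the demonstrations were recorded on a straight road, so the expert never strayed more than 0.2 m from the center and only small corrections were ever logged. The cloned policy is a 1-nearest-neighbor lookup (a simple stand-in for a trained network) that copies the action recorded in the most similar state. On a bend that pushes the car outward by 0.2 m per step, the clone keeps applying the largest correction it has seen, which is too weak, while the expert's own rule would have steered harder.

```python
# Hypothetical demonstrations recorded on a straight road: small offsets,
# small corrective steering actions.
demo_states = [-0.2, -0.1, 0.0, 0.1, 0.2]
demo_actions = [0.1, 0.05, 0.0, -0.05, -0.1]


def cloned_policy(offset):
    """1-nearest-neighbor behavioral cloning: copy the action recorded
    in the most similar demonstrated state."""
    i = min(range(len(demo_states)), key=lambda j: abs(demo_states[j] - offset))
    return demo_actions[i]


def expert_policy(offset):
    # The rule the expert actually follows: steer halfway back to the center.
    return -0.5 * offset


def rollout(policy, drift=0.2, steps=50, half_width=2.0):
    """Drive on a bend that pushes the car outward by `drift` each step."""
    offset = 0.0
    trace = []
    for _ in range(steps):
        offset += drift + policy(offset)
        trace.append(offset)
        if abs(offset) > half_width:  # left the road: unrecoverable
            break
    return trace


cloned_trace = rollout(cloned_policy)
expert_trace = rollout(expert_policy)
print(f"clone left the road after {len(cloned_trace)} steps")
print(f"expert stayed on the road, max offset {max(expert_trace):.2f} m")
```

Each individual mistake is small, but once the car reaches offsets outside the demonstrated range the clone has nothing better to copy, so the error keeps growing instead of being corrected.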

Additionally, let's not forget that in complex real-world environments, humans might not act consistently: you might drive a little faster down a road when you have an appointment than when you are going shopping. Such inconsistencies make direct imitation even harder.

Leveraging Reinforcement Learning

But Reinforcement Learning comes to the rescue! Instead of training a model only to take actions similar to a human's, modern Imitation Learning methods give the agent a reward for acting like the human demonstrator and penalize it for acting very differently. Once the agent starts diverging from the demonstrated path, this reward can nudge it back in the right direction.
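As a rough illustration of what "a reward for acting like the expert" can look like, the sketch below hand-crafts one: the reward is the negative distance from the agent's position to the nearest point the expert drove through. This is an assumption made for clarity only. Modern methods such as the one discussed next learn the reward from the demonstrations instead of hard-coding it, and the sinusoidal `expert_path` is invented.

```python
import math

# Hypothetical expert trajectory: (x, y) positions recorded along a winding road.
expert_path = [(float(x), math.sin(x / 3.0)) for x in range(20)]


def imitation_reward(position, path=expert_path):
    """Hand-crafted stand-in for a learned imitation reward:
    0 on the expert's path, increasingly negative the further away the agent is."""
    return -min(math.dist(position, p) for p in path)


on_path = (5.0, math.sin(5.0 / 3.0))
near_path = (5.0, 1.0)
far_off = (5.0, 3.0)
for name, pos in [("on path", on_path), ("near path", near_path), ("far off", far_off)]:
    print(name, round(imitation_reward(pos), 3))
```

Unlike the behavioral-cloning loss, this signal is defined everywhere, including in states the expert never visited, so it still tells an off-course agent which way is "better".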

In our example from above, once the agent drives too far off the road, it receives only small rewards, but it also learns that driving closer to the center of the road will eventually increase its reward again, which lets it find its way back. This is illustrated in the schematic animation below.
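The recovery behavior can be sketched with a greedy agent that, at every step, picks the steering action whose next state earns the highest imitation reward. The reward (negative distance to the lane center, where the expert drove), the three steering options, the outward drift of 0.2 m per step, and the starting offset of 3 m are all hypothetical choices for this illustration; a real agent would learn a policy with Reinforcement Learning rather than search one step ahead.

```python
ACTIONS = (-0.5, 0.0, 0.5)  # hypothetical steering options (m of sideways movement)


def imitation_reward(offset):
    # Hypothetical stand-in for a learned reward: the expert drove along the
    # lane center (offset 0), so being closer to it earns more reward.
    return -abs(offset)


def greedy_step(offset, drift):
    """Choose the action whose resulting state has the highest reward."""
    return max(ACTIONS, key=lambda a: imitation_reward(offset + drift + a))


def recover(start_offset, drift=0.2, steps=30):
    offset = start_offset
    trace = [offset]
    for _ in range(steps):
        offset += drift + greedy_step(offset, drift)
        trace.append(offset)
    return trace


trace = recover(3.0)  # start well off the road (assumed half-width: 2 m)
print([round(x, 2) for x in trace[:12]])
```

Even though the agent starts in a state no demonstration covered, the reward still ranks its options, so it works its way back toward the expert's path and then stays close to it.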

IQ-Learn on the Robomimic Can task, with a 6-DOF arm learning to pick up cans and place them in the correct bin (using 30 expert demos). Source: The Stanford AI Lab

Indeed, learning a reward instead of blindly trying to follow a human's path captures much more of the knowledge and reasoning behind human behavior. Inferring a reward from expert demonstrations in this way is known as Inverse Reinforcement Learning. To illustrate how powerful this approach can be even in very complex environments, the examples in this section come from a blog post by the authors of IQ-Learn, a state-of-the-art Imitation Learning algorithm based on Inverse Reinforcement Learning. As they show, an agent can achieve strong performance from as few as 20 to 30 expert demonstrations.

IQ-Learn on Minecraft solving the Create Waterfall task (using 20 expert demos). Source: The Stanford AI Lab
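The "IQ" in IQ-Learn stands for inverse soft-Q learning. The sketch below illustrates the soft Bellman relation such methods build on, in a tabular setting with deterministic transitions. A Q-function, together with its soft-optimal policy π(a|s) ∝ exp(Q(s,a)/α), implies a reward r(s,a) = Q(s,a) − γ·V(s′), where V(s′) = α·log Σ exp(Q(s′,a′)/α). Roughly speaking, IQ-Learn trains Q so that this implied reward is high on expert transitions. That training objective is not shown here, and the two-state Q-table below is a made-up example rather than anything from the IQ-Learn authors.

```python
import math


def soft_value(q_row, alpha=1.0):
    """Soft state value V(s) = alpha * log(sum_a exp(Q(s, a) / alpha))."""
    m = max(q_row)  # subtract the maximum for numerical stability
    return m + alpha * math.log(sum(math.exp((q - m) / alpha) for q in q_row))


def soft_policy(q_row, alpha=1.0):
    """Soft-optimal policy: pi(a | s) proportional to exp(Q(s, a) / alpha)."""
    m = max(q_row)
    weights = [math.exp((q - m) / alpha) for q in q_row]
    total = sum(weights)
    return [w / total for w in weights]


def implied_reward(Q, state, action, next_state, gamma=0.9, alpha=1.0):
    """Reward implied by Q for a deterministic transition (state, action) -> next_state,
    obtained by inverting the soft Bellman equation Q(s, a) = r(s, a) + gamma * V(s')."""
    return Q[state][action] - gamma * soft_value(Q[next_state], alpha)


# Hypothetical tabular Q-function: action 0 = keep the lane, action 1 = swerve.
Q = {"on_road": [10.0, -3.0], "off_road": [-2.0, -2.0]}
transitions = {("on_road", 0): "on_road", ("on_road", 1): "off_road"}

print("policy on the road:", [round(p, 6) for p in soft_policy(Q["on_road"])])
for (s, a), s_next in transitions.items():
    print(f"implied reward for action {a} in {s}: {implied_reward(Q, s, a, s_next):.3f}")
```

The appeal of this formulation is that a single learned Q-function yields both the policy (through the softmax) and the reward (through the inverse Bellman step), so no separate reward model has to be trained.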

Driving Business Growth with Imitation Learning

By harnessing the power of imitation, businesses stand to unlock lucrative opportunities. From autonomous vehicles to personalized recommendation systems, the ability to replicate human expertise at scale holds immense potential for profit and innovation. Moreover, as algorithms like IQ-Learn demonstrate, even with a modest number of demonstrations, remarkable performance can be achieved.

In a collaborative effort, STRG and FH St. Pölten, with the support of the FFG (Österreichische Forschungsförderungsgesellschaft, the Austrian Research Promotion Agency), are currently exploring the power of Reinforcement and Imitation Learning in the context of online web portals in a locally funded Austrian research project called AGENTS.

Interested in finding out more?

Our recently published research paper “Bridging the Gap: Conceptual Modeling and Machine Learning for Web Portals” explores the intersection of conceptual modeling and machine learning to analyze the user journey of online shopping portals.

Meet Tobias Kietreiber, STRG Guest Author

He began his academic career studying technical mathematics and eventually earned a master's degree from the prestigious Technical University of Vienna. This solid foundation paved the way for his successful transition into software engineering. Currently, Tobias is a researcher at the University of Applied Sciences St. Pölten (FH St. Pölten), where he explores ways to advance machine learning methods and their applications. One of his most notable recent projects applies imitation learning, a sophisticated form of artificial intelligence that enables machines to mimic human actions, to car racing. His work was showcased at the recent SAINT (Social Artificial Intelligence Night) event, highlighting his contributions to the field.

We are thrilled to have Tobias share his insights in this blog post. His expertise in imitation learning, an exciting subfield of machine learning, opens up innovative ways to create intelligent systems. We are delighted to collaborate with him and look forward to more groundbreaking work together in the future.